Build a highly scalable cost optimisation service

Introduction

NashTech helped SQream build a highly secured, performant, extensible compiler frontend service that successfully optimised all queries, reducing the cost of running a query by 60%.

SQream provides an analytics platform that minimises Total Time to Insight (TTTI) for time-sensitive data, on-prem and on-the-cloud. Designed for tera-to-petascale data, the GPU-powered platform enables enterprises to rapidly ingest and analyse their growing data – providing full-picture visibility for improved customer experience, operational efficiency, and previously unobtainable business insights.

Impact

- Build a cost-based optimiser for the existing database engine and integrate it with an industry-standard SQL parser.

- Bring down the cost of running a query by 60% by selecting the best plan based on the SQream representation of the data.

Challenges

- SQreamDB is a distributed database and it is quite complex to model the costs of query execution.

- Need of an industry-standard SQL parser/validator which can be enhanced to keep up the pace with the competitors.

- The backend engine needs to execute queries efficiently on huge volumes of data.

- A particular complex SQL can generate many query plans so the cost calcutaion of each and every query plan is expensive, hence there should be a balance between the query cost and cost of selecting the best plan.

Approach

SQream DB has a Rule-based query optimiser, but it is impossible to correctly optimise the query without considering the data, data skewness, workloads, and the variety of data. The rule-based optimiser applies different rules to optimise the query, so to cover all the plans, more rules are needed, which results in many complex rules as well as we have to add hints to make those rules work. Still, the required query efficiency can not be achieved for complex queries. Hence, the idea of Cost-Based Optimisation looks lucrative, but it is a hard problem to solve in distributed databases. Since the need was urgent, we narrowed it down to Calcite which has a lot of inbuilt rules, as well as a cost-based planner known as Volcano. We wanted to leverage as much functionality from Volcano to enable us to build a Cost-Based Optimiser for SQream DB. Calcite offers you a framework to build your own rules as well the framework is highly customisable to support all the needs that we have.

Solution

The primary goals for us were to:

- Integrate Apache Calcite as a frontend to optimise query execution plans.

- Customise Apache Calcite to produce the query plans that SQream can understand keeping in mind the underlying storage structure.

- An evolving parser that can easily support new SQL types, statements, and operators.

- Custom optimisation rules based on business logic e.g. merge sort on distributed sorted data.

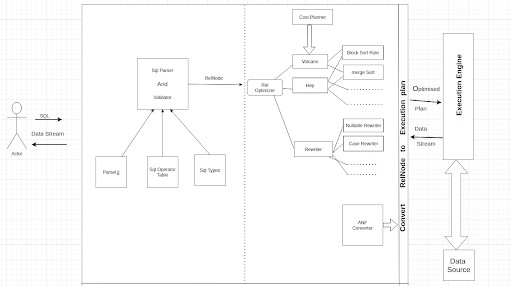

To achieve these goals the following High-Level Architecture was proposed to build a highly scalable cost optimisation service as described in the diagram below. To achieve these goals the following High-Level Architecture was proposed to build a highly scalable cost optimisation service as described in the diagram below.

01 Parsing

The whole process starts with parsing. A query, to be understood by the database engine, must first be parsed using an SQL parser, which takes a string of characters and tries to deduce its syntactic structure in the form of a parse tree. It uses a set of syntax rules called language grammar, which defines how an SQL query must look to be considered valid and acceptable to the query engine.

For instance, a rule for parsing SQL SELECT statements might look like this:

select:

<SELECT> expressionList

[<FROM> table]

[<WHERE> condition]

[<GROUP> <BY> groupingList]

[<HAVING> condition]

It declares that a SELECT statement must start with the keyword SELECT, followed by a list of fields and/or expressions to select (could be only one), and optionally any, or all, of the following: a FROM clause specifying the source of data from which to do a select (i.e. a table or a subquery); a WHERE clause filtering selected rows based on a Boolean condition; a GROUP BY clause aggregating rows together based on some keys; and a HAVING clause filtering out some groups if a condition isn’t satisfied.

02 Validation

Another important purpose of validation is to correctly identify to which exact object (field, table, function) any identifier named in the query really refers and also to assign correct data types to every field, row, or expression that therein occurs.

So how we do the validations:

- For validations, we create our schema with the help of AbstractSchema class and extend Apache Calcite’s AbstractSchema class to define our schema. And validate the query

- Once the validation is passed then we Got SqlNode (SqlNode is a SQL parse tree. It may be an operator, literal, identifier, and so forth

- Once we get the SqlNode now the next step is to generate our query as a tree structure because we know every query is represented as a tree

03 Regional algebra

Relational algebra deals with abstract transformations over sets of data, such as.

- Selection: Filtering based on a predicate.

- Projection: Choosing and modifying some columns of a row.

- Union: Combining several row sets into one.

- Aggregation: Computing a scalar function over a set of rows.

Conversion to a Relational Tree:

AST(abstract syntax tree) is not convenient for query optimisation because the semantics of its nodes are too complicated. It is much more convenient to perform query optimisation on a tree of relational operators, defined by the RelNode subclasses, such as Scan, Project, Filter, Join, etc. We use SqlToRelConverter, another monstrous class of Apache Calcite, to convert the original AST into a relational tree.

04 Query optimisation

Optimisation is a process of conversion of a relation tree to another relational tree. You may do rule-based optimisation with heuristic or cost-based planners, HepPlanner and VolcanoPlanner respectively. You may also do any manual rewrite of the tree without a rule. Apache Calcite comes with several powerful rewriting tools, such as RelDecorrelator and RelFieldTrimme.

- Typically, to optimise a relational tree, you will perform multiple optimisation passes using rule-based optimisers and manual rewrites

- We used VolcanoPlanner to perform cost-based optimisation. In the end, a well-optimised physical execution plan is passed on to the query execution engine whose job is to realise it and produce the desired results

Results

- Improved DB performance by at least 30% for all queries by providing much-optimised execution plans. For complex queries, the optimisation was more than 300% as CBO removed all the redundant and repetitive operations from the SQL

- Reduced the overall build and deployment time by more than 50% by separating these concerns from the DB engine

- Enabled integration into the Scala ecosystem by writing Sbt tasks and plugins. This allowed them to leverage tools and frameworks to achieve high concurrency and fault tolerance

The result was a highly secured, performant, extensible compiler frontend service that successfully optimised all the queries and generate the corresponding physical tree.

“I want to thank NashTech for their great contribution in building the CBO for SQream enabling us to successfully integrate calcite into our system.”

Gill Cohen – SQream Project Manager

Read more case studies

Confident modernisation through the iPoint-NashTech partnership

NashTech implemented automation testing to overcome maintenance and management challenges associated with former NettingEQ service infrastructures and codebase.

VietinBank and NashTech – Award winning digital transformation with ‘Genie’ AI Chatbot

VietinBank, one of Vietnam’s largest and most prestigious banks and part of the nation’s “Big 4”, partnered with NashTech to drive digital transformation through the implementation of an advanced AI...

Building a long-term partnership with SLR Consulting

We worked with SLR to deliver a range of digital solutions including a sustainable digital business card platform, a smart room booking system for desks and parking, an automated supplier onboarding...

Let's talk about your project

- Topics:

Our partnerships